XALON Tools™

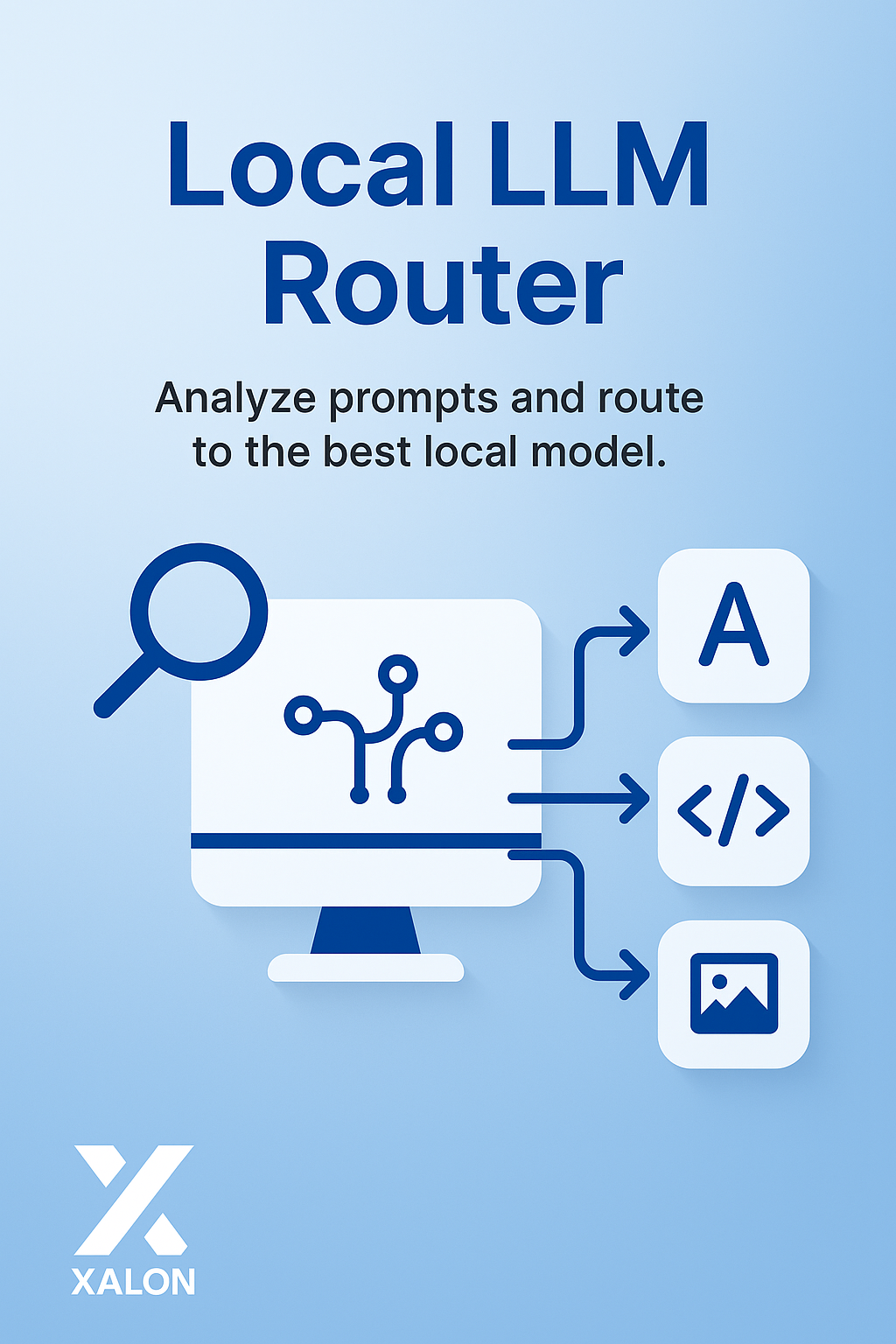

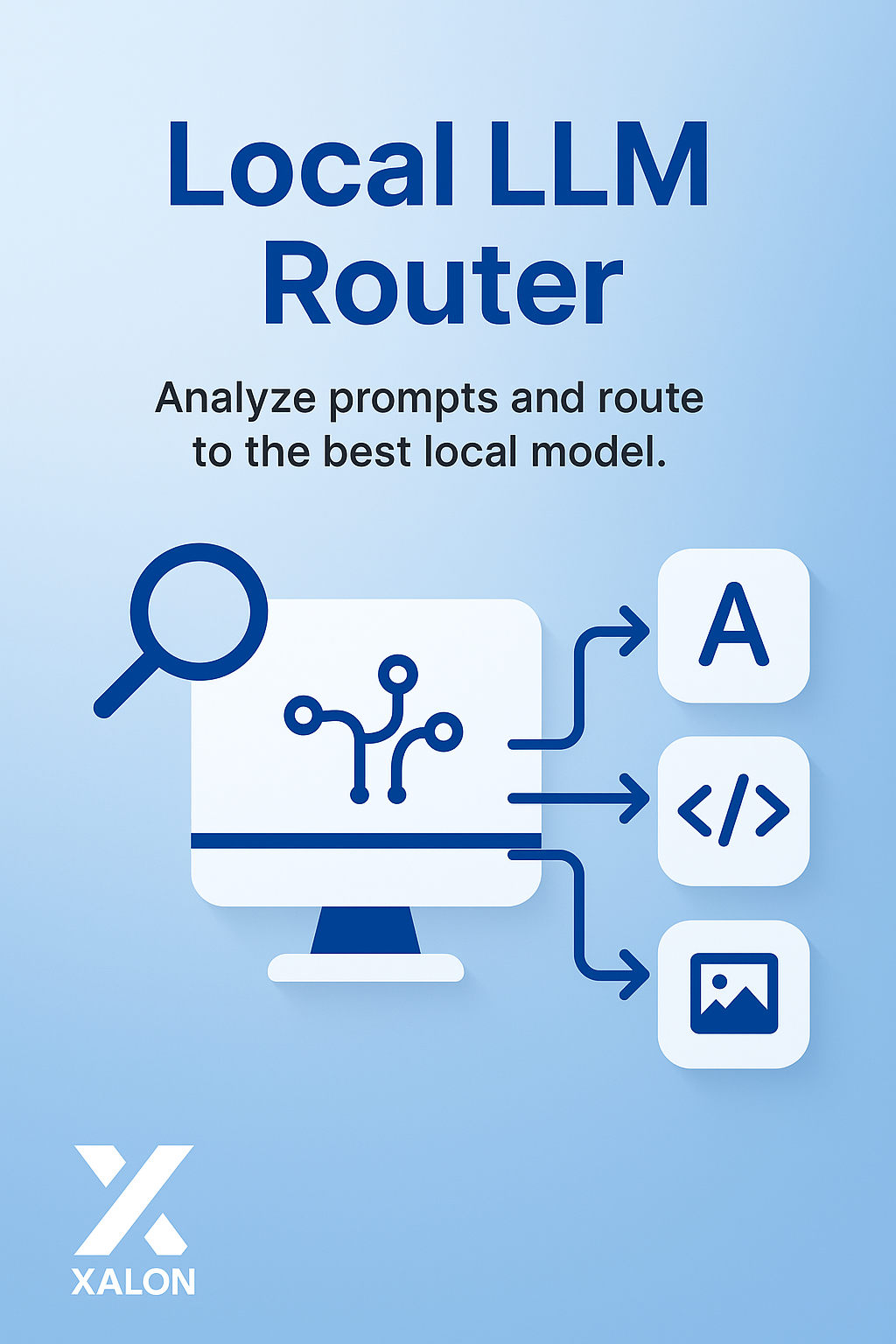

Private and Local Ollama Self Hosted Dynamic LLM Router

🧠 Power up your local AI stack with smart model routing!

This automation turns your Ollama setup into an intelligent local LLM router: it analyzes each user prompt in real time and selects the best specialized model for the task, all without sending a single byte to the cloud.

Perfect for AI builders, developers, or anyone who values privacy, this workflow picks a suitable model for every prompt, with zero manual switching.

What it does:

🔍 Detects the intent of each user prompt automatically

🧭 Routes requests to the best-suited local Ollama model (text, code, or vision)

🤖 Supports popular models like phi4, llama3.2, qwen2.5-coder, and granite3.2-vision

🗣️ Maintains memory for consistent conversations

🔐 Runs entirely on your machine — 100% private

✅ Easy setup with Ollama + n8n integration

🔧 Fully customizable model logic and routing rules
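To make the routing idea above concrete, here is a minimal Python sketch of the same pattern outside n8n. It is an illustration under stated assumptions, not the workflow's actual logic: the keyword heuristic in `detect_intent`, the intent-to-model mapping, and every function name are hypothetical. Only the model names come from the list above, and the request shape follows Ollama's local `/api/chat` endpoint on its default port.

```python
import json
import re
import urllib.request

# Assumed mapping: the model names are from the feature list,
# but which model serves which intent is a guess for illustration.
MODEL_BY_INTENT = {
    "vision": "granite3.2-vision",
    "code": "qwen2.5-coder",
    "text": "llama3.2",
}

# Hypothetical keyword heuristic standing in for real intent detection.
CODE_HINTS = re.compile(
    r"```|\b(def|class|function|bug|compile|stack trace|regex|sql)\b",
    re.IGNORECASE,
)


def detect_intent(prompt, has_image=False):
    """Classify a prompt as 'vision', 'code', or 'text'."""
    if has_image:
        return "vision"
    if CODE_HINTS.search(prompt):
        return "code"
    return "text"


def build_chat_request(prompt, history=None, images=None):
    """Build an Ollama /api/chat payload for the routed model.

    `history` is a list of earlier {"role", "content"} messages, which is
    how conversation memory is carried between turns in this sketch.
    `images` is a list of base64-encoded image strings.
    """
    intent = detect_intent(prompt, has_image=bool(images))
    message = {"role": "user", "content": prompt}
    if images:
        message["images"] = images
    return {
        "model": MODEL_BY_INTENT[intent],
        "messages": list(history or []) + [message],
        "stream": False,
    }


def send(payload, base_url="http://localhost:11434"):
    """POST the payload to a locally running Ollama server."""
    request = urllib.request.Request(
        f"{base_url}/api/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)["message"]["content"]
```

Calling `send(build_chat_request("Fix this bug in my function"))` would route the prompt to the coding model, provided Ollama is running locally and the model has been pulled. Customizing the routing rules then amounts to editing `MODEL_BY_INTENT` and the detection function.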

Need help setting it up? We offer full configuration and testing for a one-time fee.