XALON Tools™

Evaluation Metric: Summarization

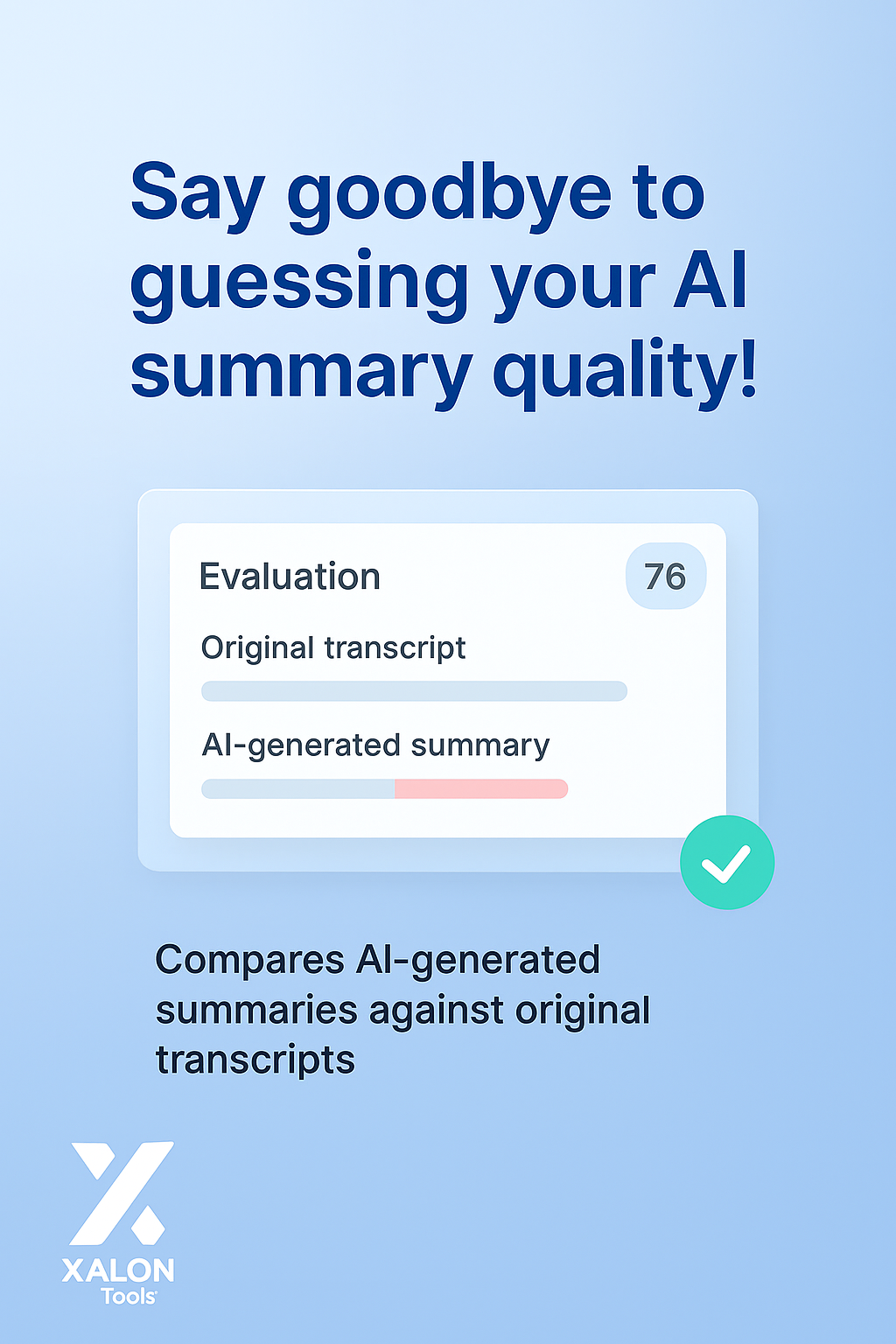

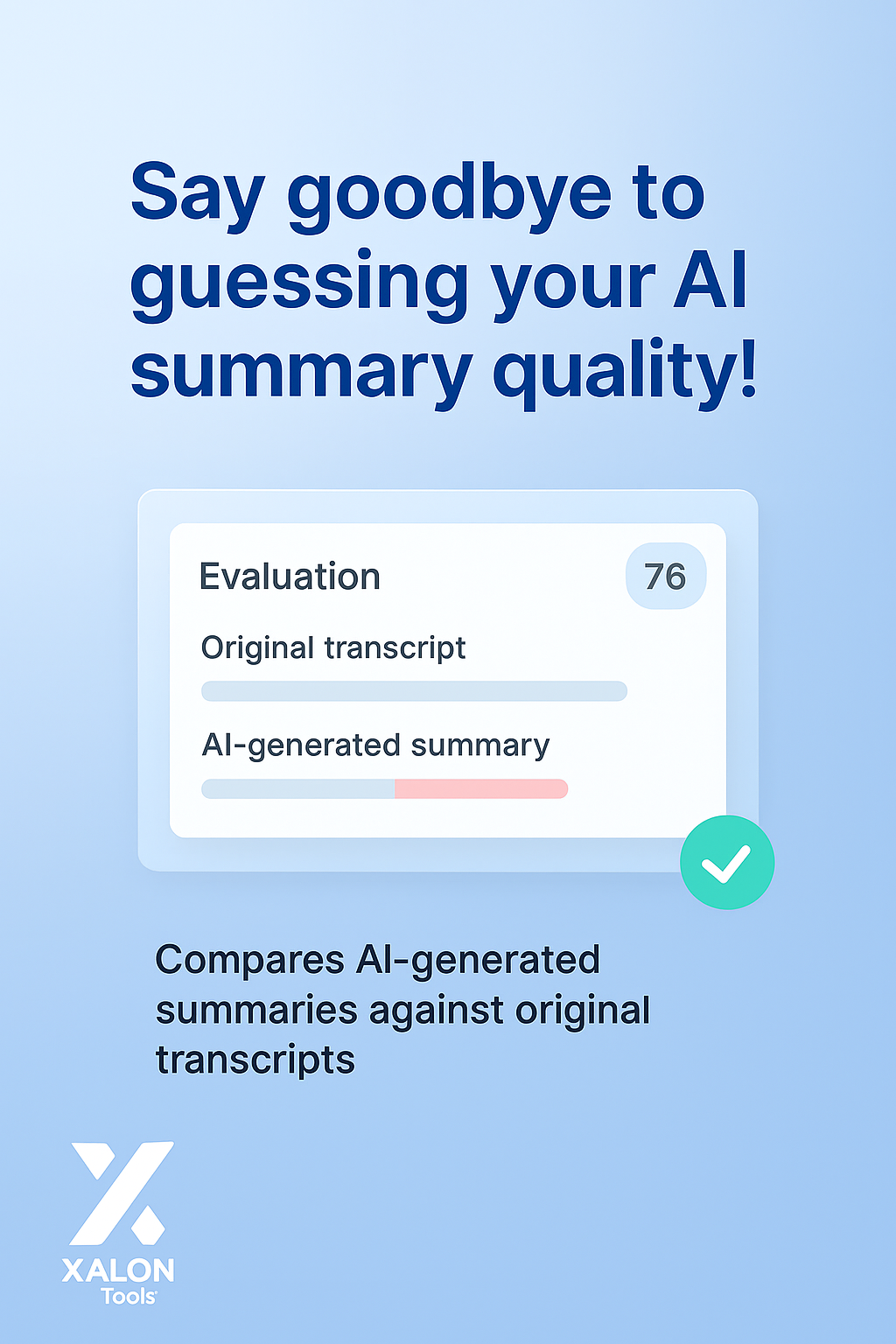

Say goodbye to guessing your AI summary quality!

This automation helps you evaluate the accuracy of AI-generated summaries by comparing them directly with the original transcript. It flags hallucinations and gives you the feedback you need to improve your prompt design. It is ideal for anyone building or testing summarization workflows.

Whether you're fine-tuning your prompts or benchmarking model outputs, this tool helps you verify that your summaries stay true to the source.

What it does:

🧾 Compares AI-generated summaries against original transcripts

🧠 Flags content in summaries that doesn't appear in the source

📊 Scores summaries based on factual alignment and LLM adherence

📉 Highlights low-scoring results to guide prompt and model improvements

📁 Logs evaluations to Google Sheets for easy review and iteration

✅ Setup guide & sample evaluation sheet included
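The listing does not say how the tool scores summaries. To show the general idea of "flag content in the summary that doesn't appear in the source", here is a minimal illustrative sketch. It is not the product's actual method. It substitutes a simple lexical-overlap heuristic for whatever model-based check the tool uses. Each summary sentence is compared with the transcript's vocabulary, and sentences whose content words are mostly missing from the source are flagged. The function names, the stopword list, and the 0.6 threshold are all hypothetical choices.

```python
import re

# Hypothetical, deliberately small stopword list; a real evaluator would use a fuller one.
STOPWORDS = {"the", "a", "an", "and", "or", "of", "to", "in", "on", "is", "are",
             "was", "were", "it", "that", "this", "for", "with", "as", "by", "at", "be"}


def content_words(text: str) -> set[str]:
    """Return lowercase word tokens from text, with stopwords removed."""
    return {w for w in re.findall(r"[a-z0-9']+", text.lower()) if w not in STOPWORDS}


def evaluate_summary(summary: str, transcript: str, threshold: float = 0.6) -> dict:
    """Score a summary by the share of its sentences supported by the transcript.

    Assumption: a sentence counts as "supported" when at least `threshold` of its
    content words also occur in the transcript. This lexical heuristic stands in
    for the (unspecified) LLM-based check the product may use.
    """
    source = content_words(transcript)
    # Naive sentence split on ., ! or ? followed by whitespace.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", summary) if s.strip()]

    supported = 0
    flagged = []  # (sentence, coverage) pairs that look unsupported by the source
    for sentence in sentences:
        words = content_words(sentence)
        if not words:
            supported += 1  # nothing checkable, so treat it as supported
            continue
        coverage = len(words & source) / len(words)
        if coverage >= threshold:
            supported += 1
        else:
            flagged.append((sentence, round(coverage, 2)))

    score = supported / len(sentences) if sentences else 0.0
    return {"score": round(score, 2), "flagged": flagged}


if __name__ == "__main__":
    transcript = "We agreed to ship the beta on Friday. Maria will update the docs."
    summary = ("The team will ship the beta on Friday. Maria updates the docs. "
               "The budget was doubled.")
    print(evaluate_summary(summary, transcript))
```

With the sample inputs, the first two sentences pass and "The budget was doubled." is flagged because none of its content words occur in the transcript, which gives a score of 0.67. A word-overlap check cannot catch paraphrased facts or subtle distortions. That limitation is why evaluators like this one typically use a language model as the judge. Each result row (score plus flagged sentences) is the kind of record you would log to a sheet for review.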

Need help setting it up? We offer full configuration, model/prompt testing, and accuracy optimization support.