XALON Tools™

Evaluation metric example: Check if tool was called

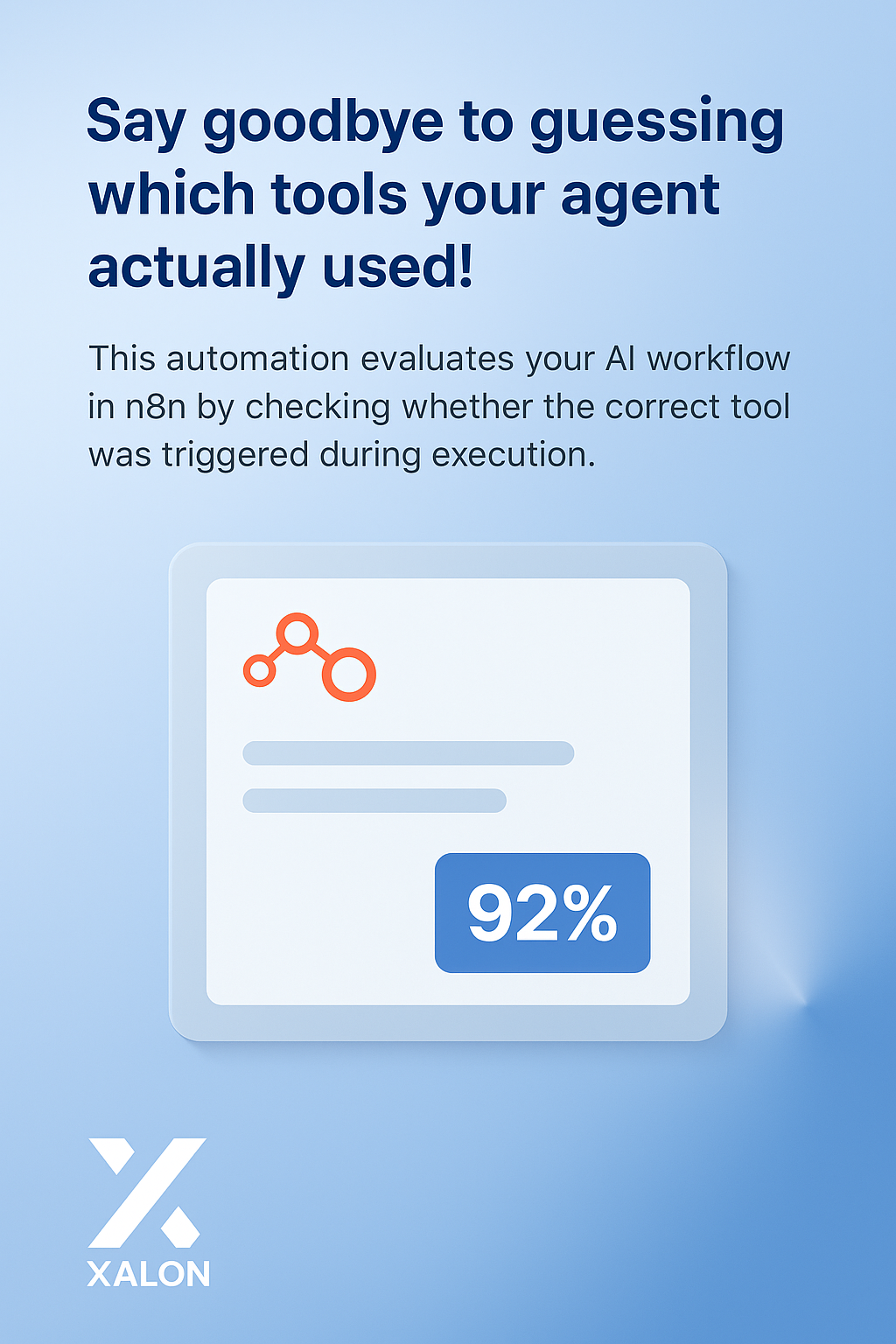

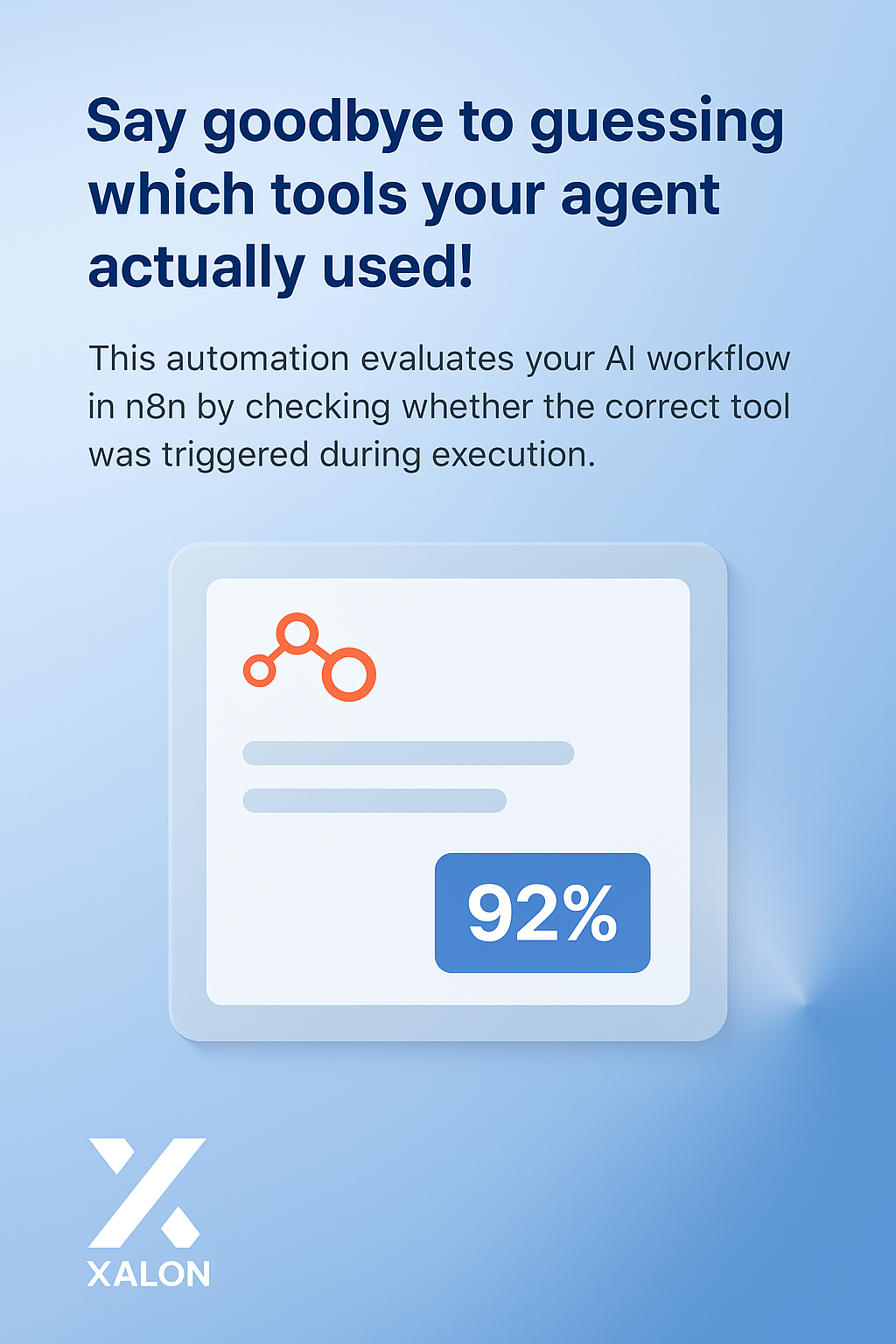

Say goodbye to guessing which tools your agent actually used!

This automation evaluates your AI workflow in n8n by checking whether your agent called the correct tools during execution. It compares the tools the agent actually used with the expected tools listed in your test dataset, giving you a clear performance score for each run.

Perfect for validating AI agent behavior in tool-using workflows like automation agents, copilots, or decision trees.

What it does:

🧪 Runs evaluation tests from a dedicated evaluation trigger that sits alongside your regular trigger

🧠 Captures the full list of tools the agent used during execution

🔍 Compares used tools against expected tools in your test dataset

📊 Sends a performance metric back to n8n so you can track accuracy

💰 Skips evaluation logic during standard runs to save resources

✅ Setup guide & importable automation included
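
Curious what the comparison step looks like? Here is a minimal Python sketch of the idea, not the code shipped in the automation. It assumes the agent returns its intermediate steps as a list of records with the tool name under `action.tool`, and that the dataset provides the expected tools as a list of names. The function names `extract_tool_names` and `tools_called_score` are hypothetical, chosen for illustration only.

```python
from typing import Any


def extract_tool_names(intermediate_steps: list[dict[str, Any]]) -> list[str]:
    """Collect tool names from the agent's steps, in call order.

    Assumption: each step looks like {"action": {"tool": "<name>"}, ...}.
    Steps without a tool name are skipped.
    """
    names = []
    for step in intermediate_steps:
        tool = step.get("action", {}).get("tool")
        if tool:
            names.append(tool)
    return names


def tools_called_score(used: list[str], expected: list[str]) -> float:
    """Return 1.0 if every expected tool was called at least once, else 0.0.

    Order and repeat calls are ignored. An empty expected list scores 1.0.
    """
    return 1.0 if set(expected) <= set(used) else 0.0


# Example run with made-up data
steps = [
    {"action": {"tool": "calculator"}, "observation": "4"},
    {"action": {"tool": "web_search"}, "observation": "..."},
]
used_tools = extract_tool_names(steps)
score = tools_called_score(used_tools, expected=["calculator"])
```

This sketch scores a run as pass or fail. If you would rather get partial credit when only some of the expected tools were called, you could return the fraction of expected tools found instead.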

Need help setting it up? We offer full configuration and testing for a one-time fee.